Open source, or just the assurance of knowing there’s a fire exit nearby? What's more important to a museum?

I've never really got into the debate about open source software because, well, I can't really get into it. Sure, there are philosophical aspects that ring my bell a little bit, and there are pragmatic aspects too, but I don't have a feeling that using open source software is the only morally acceptable answer so, overall, I'm ambivalent. Ambivalence (or nuance?) makes for poor polemic, which OSS advocates never lack.

All the same it does often nag at me that I should bring together the strands of my thoughts, if only because the cheerleaders of open source software have such an easy case to make! And on the contrary side, those who use less open alternatives sometimes seem obliged to take oppositional, defensive stances, attacking OSS on principle: an equally bullshit attitude.

Clearly there is a philosophical question about the right of software developers to own and limit access to software; a right which is in direct opposition to OSS. Personally I think there's nothing morally wrong with asking people to pay for something you made, although obviously I think there's plenty that’s morally right about giving it away! There are people who think that buying software from a company is Inherently Wrong, or that buying it without having the right and ability to modify the source-code is objectionable and a dereliction of duty. I think merely that it's a bad idea quite a bit of the time. I think this, not because it's immoral to pay for software that you can't do with exactly as you will, but because practically speaking it may expose you to costs or risks that you should try to avoid.

So bollocks to the principle, what about the practical? At least that lets us have a discussion. There’s the question of having access to the source code, as well as permission to mess with it. Now I don’t know about you, but for me this has never been a strong reason to use Linux. I have less than zero intention or likelihood of getting under the bonnet of any flavour of Linux and messing with the source, any more than I’d learn C in order to rewrite PHP itself for my own idiosyncratic needs. The further up the stack you come, the more likely I am to want access to the source – there’s perhaps a 10-20% chance we at IWM might have to mess with the Drupal core at some level (undesirable as that may seem), as we implement our new CMS; and if I can ever get my head round Java I might even one day try to contribute to the development of Solr (realistically that’s more like a 1% chance). So it’s all about what bit of the stack you’re talking about, but much of the time diving into the source is only a very theoretical possibility.

Of course freely-licensed source code also means that others can tinker with it, and this may be a good thing – someone else can make you your personalised Linux distro, perhaps? Also, for better or worse, you do tend to see products fork. Sometimes this is managed well by packaging up the diversification in modules that share a common core – this can become a bit of a nightmare of conflict management, but it’s got its merits too. Again, PHP or Drupal are examples. If nothing else it’s a nice fall-back if you know that the source is open, just in case the product is dropped by its owner (or community). And on occasion closed code has been thrown open, sometimes with great success – see Mozilla, which emerged from Netscape and took off.

How about the community? Well as usual this is a multi-level thing. If you want a community of support for the users of a product, this is not just the preserve of open source. Sometimes OSS develops a huge community of users contributing extensions, plugins etc, and sometimes it doesn’t, and it’s the same for non-OSS. Lots of Adobe or Microsoft products and platforms have massive communities around them and the sheer volume of expertise, of problems-already-solved, around the implementation or use of a product might be more important than the need to change the product itself. Once again, it’s about what you or your organisation really needs, and the potential cost of compromise.

How about the question of ownership? If a commercial company has control over the roadmap and licensing terms of some mission-critical software are you not exposing your organisation by using it? Perhaps, yes, and sometimes it’s clear that OSS can buffer you from this risk, but other times we can get a nasty surprise. MySQL is now owned by Oracle. A bunch of changes to the licence happened this autumn, and whilst Oracle might be positioning MySQL for a more enterprise-scale future it’s suddenly possible to pay an awful lot for a licence, depending upon your needs. And Java? Sun, and therefore now Oracle, owns this. It’s meant to be open source too, but ownership of the standard is complex and there’s an almighty struggle going on, with the Apache Foundation finding itself suddenly looking quite weak in the battle for control of the language that underlies most of its flagship products. And now we wonder, is even Linux going to end up open to a Microsoft attack? Some people worry that it could, following the sale of Novell. Symbian has been deserted by all its partners and is now being killed off by Nokia. If you want the Symbian source so you can plough your own furrow/fork your own eye-balls, you’d better grab it about now.

This is simply to say: the merits of OSS aren’t uniformly distributed, they’re not necessarily always of great importance in a given scenario, and sometimes they may not be worth the cost of those benefits. Cost and benefit is what should matter to a museum, not the politics of software development and ownership.

To be fair I should also mention the downsides of non-open-source software, but for expediency I’ll just say: potentially any and all of the risks for OSS mentioned above and some more.

For instance, although it’s a truism to say that what is good for the consumer is good for business, it’s not that simple. I need only say “DRM” to make the point (although where it’s been foisted upon music consumers they have voted against it and seen a partial roll-back). [edit 14/12/10: semi-relevant XKCD image follows]

The noxious Windows verification software which stops you from changing the configuration of your machine extensively – who asked for that except for Billg? A road-map determined by profit maximisation therefore doesn’t always seem to coincide with what the users want – see also Apple’s slow striptease of technology when they introduce some new gizmo-porn for an example. You want expandable memory with that? GPS? Video? You’ll have to wait a generation or two, perhaps forever.

Then there’s buying licences. At MoL we used Microsoft’s CMS2002, and in truth I’d had relatively little problem with the source-code being closed – it seemed to do what we wanted, had the power of .Net available to extend it, and there was little obvious need to mess with the “closed” parts. We got the software at a trivial price (about 1% of Percussion’s ticket price at the time, I think), but that wasn’t to last. CMS2002 was initially marketed alongside SharePoint Portal Server, but in due course the two were integrated into MOSS2007. When CMS2002 was taken off the market the only way to buy a licence for it was to get a MOSS2007 licence, and this (being a much bigger application) was about 10 times the price. Still cheap compared to much paid-for competition but no longer trivial. I wouldn’t have wanted to use MOSS2007 even if we had the resources to migrate the code, but that’s what we had to buy, and then downgrade the licence. I’d never even thought about the issue of licences being taken off the market, but of course if you want to add another production server (as we did) you have to buy what they’re selling. In principle they could have chosen not even to sell us an alternative, or asked whatever price they liked. Now that was a lesson for me, and that’s a genuine reason for avoiding products where licences are at the discretion of a vendor.

So what really matters?

OK, so there’s a debate to be had over when it’s right and important to go open source. Is it a tool, or a platform? Does it offer you a fire-exit so you can take your stuff elsewhere? What’s the total cost of ownership? Have you sold your soul? And blah blah blah.

Most of the time, though, a more pertinent question is how one procures custom applications. Throughout my time as a museum web person I have always sought to ensure that when a custom application was built for us we required the source code (and other files) and the right to modify, reuse and extend that code. We didn’t necessarily require a standard open source licence (difficult for all sorts of reasons), but enough openness for us to remain, practically speaking, independent of the supplier once the software was delivered. On rare occasions pragmatism has compromised this, with mixed results – for instance, when we used System Simulation’s Index+ to power Museum of London’s Postcodes site. In that case, we got a great application but one that we couldn’t really support ourselves, nor afford to have SSL support adequately (a side effect of project funding).

The wrap

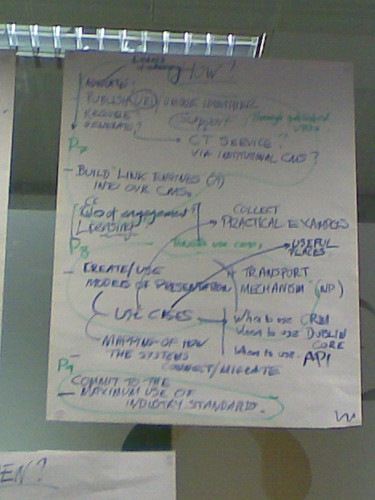

So, when should a museum use open source software? I don’t have the answer to that, but I do say that if there’s a principle that museums should follow it’s the principle of pragmatic good management, and the politics or ethical “rightness” of (F)OSS should give way to this. I’d always try to get custom applications built and licensed in a way that enables other people to maintain and extend them. And I’d select software or platforms by taking a list that looks something like the following and seeing how it applied to that specific software, to my organisation’s own situation and to the realities of what we’d want to be able to do with the software in the expected lifecycle of the system.

OSS goodness:

- Access to the source so you can fiddle with it yourself

- Access to the source so others can fiddle and you aren’t in thrall to a software co.

- Free stuff

and potentially:

- A community of like-minded developers working together to make what you, the users, want

- Greater sustainability?

OSS badness:

- lack of roadmap or leadership

- lack of clear ownership

- lack of support contracts

- poorly integrated code, add-ons/modules etc

proprietary goodness:

- all the stuff OSS can be bad at...

- sometimes, great communities of users and people who develop on top of the software

Finally, stuff I like to see but which may be present or absent from either open or proprietary software:

- Low total cost of ownership

- Known life-cycle/roadmap for several years ahead

- A clear exit strategy: open standards, comprehensive cross-compatible export mechanism

- Flexible licence, ability to buy future licences!

- Ability to change the code...sometimes

- Confidence that, if the version I want to use gets left behind, I’ll be able to take it forward myself, or do so with a bunch of others

- Not-caringness of the software: if it’s something I could do with one tool or another and really not care, then I’m free to migrate as I please. I don’t care that, say, PhotoShop isn’t open source because if it doesn’t do what I want or I can’t afford to put it on my new workstation I’ll use something else, and nothing too bad will happen

Jesus. Can I blame ambivalence for my long-windedness? Please don’t answer that.

P.S. At IWM we are shifting to a suite of open-source applications for our new websites. Some are familiar to me, some entirely new, but it's a good time to make the jump - a clean slate. I'm very excited.